AI privacy concerns are growing as emerging technologies like generative AI become more integrated into daily life. Business leaders dealing with AI and privacy issues must understand the technology’s great potential even as they guard against the accompanying privacy and ethical issues.

This guide explores some of the most common AI and privacy concerns that businesses face. It also identifies potential solutions and best practices organizations can pursue to achieve better outcomes for their customers, their reputation, and their bottom line.

KEY TAKEAWAYS

- Customers demand data privacy, but some popular AI technologies collect and use personal data in unauthorized or unethical ways.

- AI is currently a largely unregulated business technology, which leads to a variety of privacy concerns; more regulations are expected to pass into law in the future.

- Your business’s strict adherence to data, security, and regulatory best practices can protect your customers against AI privacy issues.

- Ultimately, it’s a business leader’s responsibility to hold both their chosen AI vendors and their employees accountable for AI and data privacy.

- While many AI vendors are working to improve their models’ transparency and approach to personal data, business users need to scour their current policies and systems before inputting sensitive data into any third-party system.

TABLE OF CONTENTS


- Major Issues with AI and Privacy

- How Data Collection Creates AI Privacy Issues

- Emerging Trends in AI and Privacy

- Real-World Examples of AI and Privacy Issues

- Best Practices for Managing AI and Privacy Issues

- Bottom Line: Addressing AI and Privacy Issues Is Essential

Major Issues with AI and Privacy

Given how rapidly the role of artificial intelligence has grown, it’s not surprising that issues like unauthorized incorporation of user data, unclear data policies, and limited regulatory safeguards have created significant problems with AI and privacy.

Unauthorized Incorporation of User Data

When users of AI models input their own data in the form of queries, there’s a chance that this data will become part of the model’s future training dataset. When this happens, the data can show up in outputs for other users’ queries, which is a particularly big problem if users have entered sensitive data into the system.

In a now-famous example, three different Samsung employees leaked sensitive company information to ChatGPT, information that could now be part of ChatGPT’s training data. Many vendors, including OpenAI, are cracking down on how user inputs are incorporated into future training. But there’s still no guarantee that sensitive data will remain secure and outside of future training sets.

Unregulated Use of Biometric Data

A growing number of personal devices use facial recognition, fingerprints, voice recognition, and other biometric security instead of more traditional forms of identity verification. Public surveillance devices are also beginning to use AI to scan for biometric data so individuals can be identified quickly.

While these new biometric security tools are incredibly convenient, there’s limited regulation focused on how AI companies can use this data once it’s collected. In many cases, individuals don’t even know that their biometric data has been collected, much less that it’s being stored and used for other purposes.

Covert Metadata Collection Practices

When a user interacts with an ad, a TikTok or other social media video, or virtually any web property, metadata from that interaction, along with the person’s search history and interests, can be stored for more precise content targeting in the future.

This kind of metadata collection has been going on for years, but with the help of AI, more of that data can be collected and interpreted at scale, making it possible for tech companies to target their messages at users even more precisely without users knowing how it works. While most consumer sites have policies that mention these data collection practices and/or require users to opt in, the disclosure is brief and buried in other policy text, so most users don’t realize what they’ve agreed to. This veiled metadata collection agreement subjects users, and everything on their mobile devices, to scrutiny.

Limited Built-In Security Features for AI Models

While some AI vendors choose to build baseline cybersecurity features and protections into their models, many AI models have no native cybersecurity safeguards in place. Even the AI technologies that do have basic safeguards rarely come with comprehensive cybersecurity protections. This is because taking the time to build a safer, more secure model can cost AI developers significantly, both in time to market and in overall development budget.

Whatever the reason, AI developers’ limited focus on security and data protection makes it much easier for unauthorized users and bad-faith actors to access and use other users’ data, including personally identifiable information (PII).

Lengthy and Unclear Data Storage Policies

Few AI vendors are transparent about how long, where, and why they store user data. The vendors that are transparent often store data for lengthy periods or use it in ways that clearly don’t prioritize privacy.

For example, OpenAI’s privacy policy says it can “provide Personal Information to vendors and service providers, including providers of hosting services, customer service vendors, cloud services, email communication software, web analytics services, and other information technology providers, among others. Pursuant to our instructions, these parties will access, process, or store Personal Information only in the course of performing their duties to us.”

In this case, several types of companies can gain access to your ChatGPT data for various reasons, as determined by OpenAI. It’s especially concerning that “among others” is a category of vendors that can collect and store your data, since there’s no information about what these vendors do or how they might choose to use or store your data.

OpenAI’s policy provides more information about what data is typically stored and what your privacy rights are as a consumer. You can access your data and review some information about how it’s processed, delete your data from OpenAI records, restrict or withdraw consent to data processing, and/or submit a formal complaint to OpenAI or local data protection authorities.

This more comprehensive approach to data privacy is a step in the right direction, but the policy still contains certain opaque and concerning elements, especially for Free and Plus plan users, who have limited control over or visibility into how their data is used.

Little Regard for Copyright and IP Laws

AI models pull training data from all corners of the web. Unfortunately, many AI vendors either don’t realize or don’t care when they use someone else’s copyrighted artwork, content, or other intellectual property without consent.

Major legal battles have focused on AI image generation vendors like Stability AI, Midjourney, DeviantArt, and Runway AI. Several of these tools are alleged to have scraped artists’ copyrighted images from the internet without permission. Some of the vendors defended their actions by citing the lack of laws that prevent them from following this process for AI training.

The problems of using unauthorized copyrighted material and IP grow much worse as AI models are trained, retrained, and fine-tuned with this data over time. Many of today’s AI models are so complex that even their developers can’t confidently say what data is being used, where it came from, and who has access to it.

Limited Regulatory Safeguards

Some countries and regulatory bodies are working on AI regulations and safe use policies, but no overarching standards are formally in place to hold AI vendors accountable for how they build and use artificial intelligence tools. The proposed regulation closest to becoming law is the EU AI Act, expected to be published in the Official Journal of the European Union in summer 2024. Some parts of the law will take as long as three years to become enforceable.

With such limited regulation, numerous AI vendors have come under fire for IP violations and opaque training and data collection processes, but little has come of these allegations. In most cases, AI vendors set their own data storage, cybersecurity, and user rules without interference.

How Data Collection Creates AI Privacy Issues

Unfortunately, the sheer volume and variety of ways that data is collected all but guarantees that some of it will find its way into irresponsible uses. From web scraping to biometric technology to IoT sensors, modern life is essentially lived in service of data collection efforts.

Web Scraping Casts a Wide Net

Because web scraping and crawling require no special permissions and let vendors collect massive amounts of varied data, AI tools often rely on these practices to build training datasets quickly and at scale. Content is scraped from publicly available sources on the internet, including third-party websites, wikis, digital libraries, and more. In recent years, user metadata is also increasingly pulled from marketing and advertising datasets and from websites with data about targeted audiences and what they engage with most.
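Scraping may not require special permissions, but many sites publish machine-readable opt-out rules in a robots.txt file, and some name AI crawlers specifically. As a minimal sketch of a more responsible crawler, the code below uses Python’s standard-library `urllib.robotparser` to check those rules before fetching pages. The robots.txt content, the `ExampleAIBot` user agent, and the example.com URLs are hypothetical. Honoring robots.txt is a voluntary convention, not a legal safeguard or a substitute for consent.

```python
from typing import List
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt; a real crawler would download it from
# https://<site>/robots.txt with RobotFileParser.set_url() and .read().
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/

User-agent: ExampleAIBot
Disallow: /
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt rules from a string instead of fetching them."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def allowed_urls(parser: RobotFileParser, user_agent: str, urls: List[str]) -> List[str]:
    """Keep only the URLs this user agent is permitted to fetch."""
    return [url for url in urls if parser.can_fetch(user_agent, url)]


if __name__ == "__main__":
    rules = build_parser(ROBOTS_TXT)
    candidates = [
        "https://example.com/blog/post-1",
        "https://example.com/private/customer-list",
    ]
    # A generic crawler falls under "User-agent: *" and skips /private/.
    print(allowed_urls(rules, "GenericCrawler", candidates))
    # The hypothetical AI crawler is disallowed from the whole site.
    print(allowed_urls(rules, "ExampleAIBot", candidates))
```

The first call keeps only the blog post, and the second returns an empty list, because the site has opted that crawler out entirely.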

User Queries in AI Models Retain Data

When a user enters a question or other data into an AI model, most AI models store that data for at least a few days. While that data may never be used for anything else, many artificial intelligence tools collect it and hold onto it for future training purposes.

Biometric Technology Can Be Intrusive

Surveillance equipment, including security cameras, facial and fingerprint scanners, and microphones, can be used to collect biometric data and identify individuals without their knowledge or consent. State by state, U.S. rules are getting stricter about how transparent companies must be when using this kind of technology. For the most part, however, companies can still collect, store, and use this data without asking customers for permission.

IoT Sensors and Devices Are Always On

Internet of Things (IoT) sensors and edge computing systems collect massive amounts of moment-by-moment data and process that data nearby to complete larger and faster computational tasks. AI software often takes advantage of an IoT system’s detailed database and collects the relevant data through methods like data learning, data ingestion, secure IoT protocols and gateways, and APIs.

APIs Interface With Many Applications

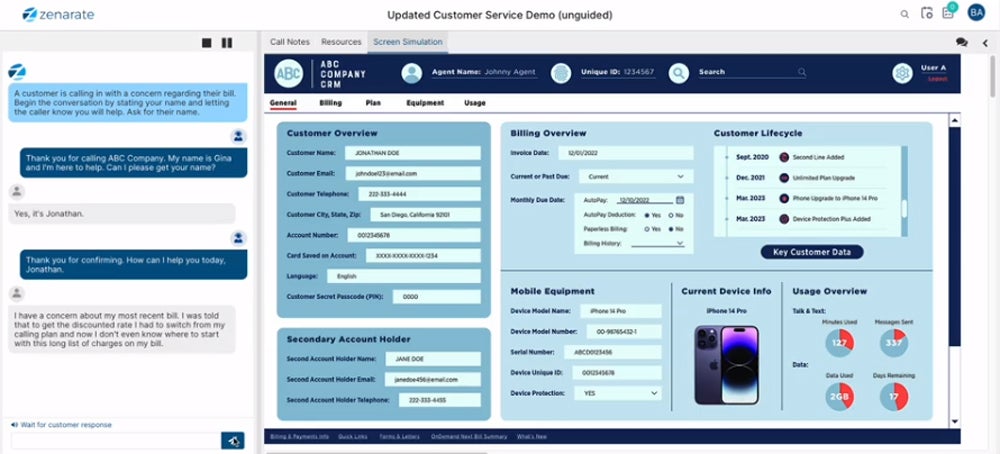

APIs give users an interface to different kinds of business software, so they can easily collect and integrate different types of data for AI analysis and training. With the right API and setup, users can collect data from CRMs, databases, data warehouses, and both cloud-based and on-premises systems. Given how few users pay attention to the data storage and use policies their software platforms follow, it’s likely that many users have had their data collected and applied to different AI use cases without their knowledge.

Public Records Are Easily Accessed

Whether or not records are digitized, public records are often collected and incorporated into AI training sets. Information about public companies, current and historical events, criminal and immigration records, and other public information can be collected with no prior authorization.

User Surveys Drive Personalization

Though this data collection method is more old-fashioned, surveys and questionnaires are still a tried-and-true way for AI vendors to collect data from their users. Users may answer questions about what content they’re most interested in, what they need help with, how their most recent experience with a product or service went, or any other question that gives the AI a better idea of how to personalize interactions.

Emerging Trends in AI and Privacy

Because the AI landscape is evolving so rapidly, the trends shaping AI and privacy issues are also changing at a remarkable pace. The leading trends include major advances in AI technology itself, the rise of regulations, and the role of public opinion in AI’s growth.

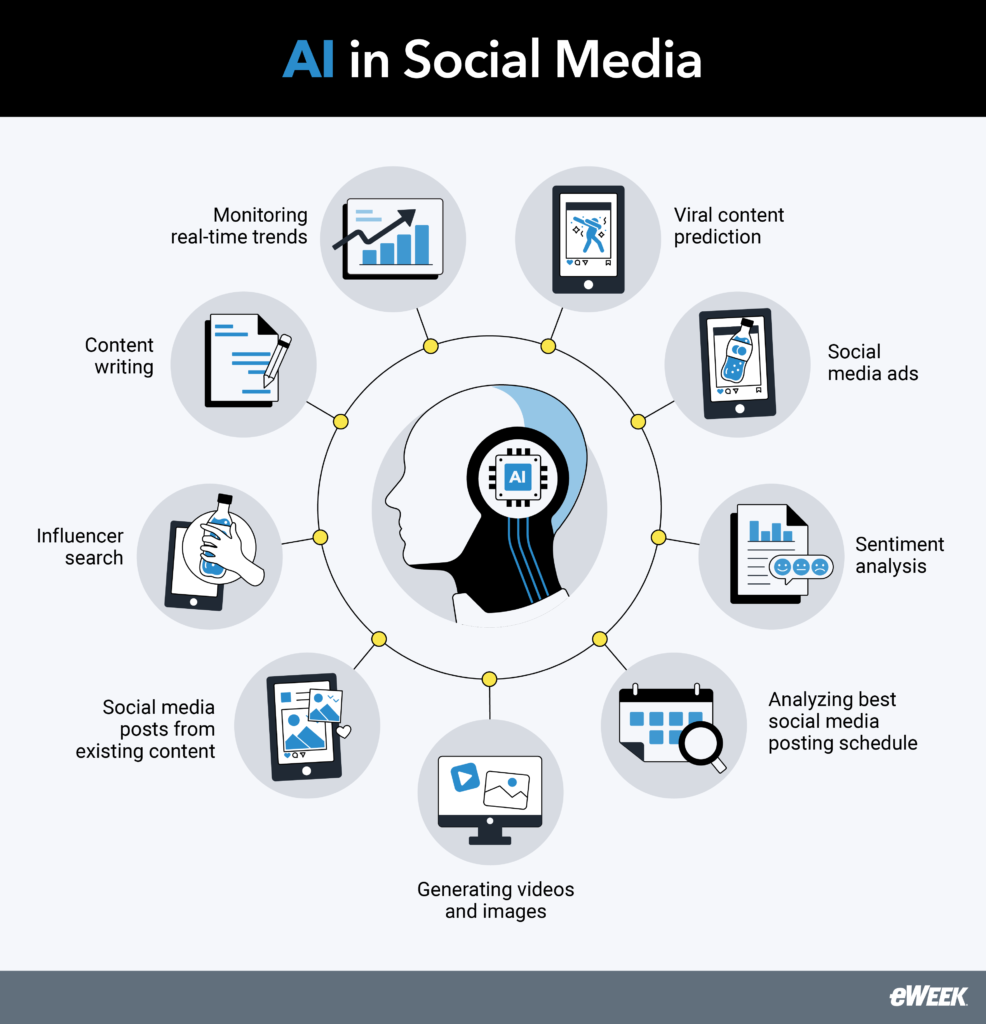

Advancements in AI Technologies

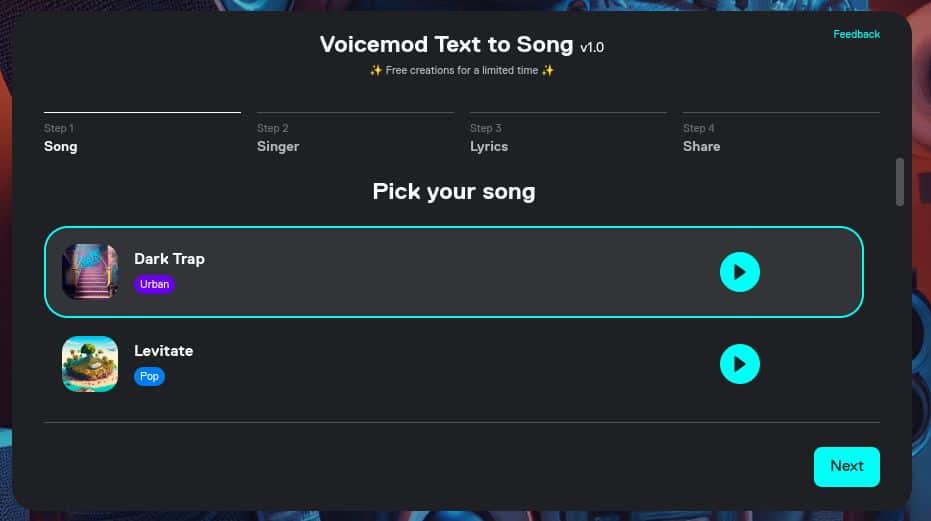

AI technologies have exploded in sophistication, use cases, and public interest and knowledge. This growth has occurred with more traditional AI and machine learning technologies but also with generative AI.

Generative AI’s large language models (LLMs) and other massive-scale AI technologies are trained on extremely large datasets, including internet data and some more private or proprietary datasets. While data collection and training methodologies have improved, AI vendors and their models often aren’t transparent about their training or the algorithmic processes they use to generate answers.

To address this issue, many generative AI companies have updated their privacy policies and their data collection and storage standards. Others, such as Anthropic and Google, have developed and released research illustrating how they’re working to incorporate more explainable AI practices into their models, which improves transparency and ethical data usage across the board.

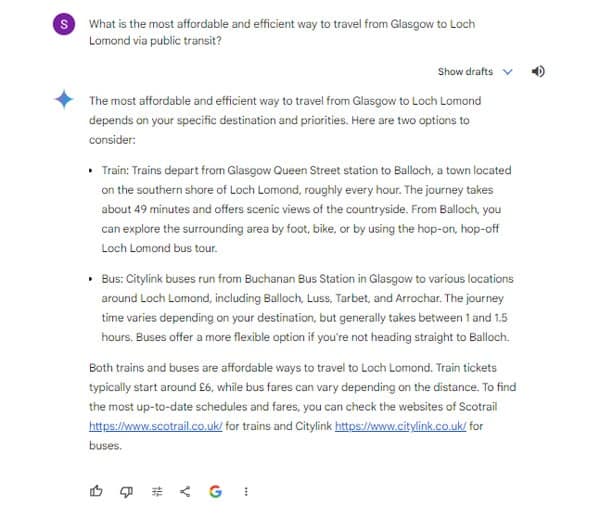

Google in particular has grown its following by prioritizing user feedback, as shown by the feedback icons in Google Gemini that let users provide input, including reporting privacy concerns.


Impact of AI on Privacy Laws and Regulations

Most privacy laws and regulations don’t yet directly address AI, how it can be used, or how data can be used in AI models. As a result, AI companies have had a lot of freedom to do what they want. This has led to ethical dilemmas like stolen IP, deepfakes, sensitive data exposed in breaches or training datasets, and AI models that appear to act on hidden biases or malicious intent.

More regulatory bodies, both governmental and industry-specific, are recognizing the threat AI poses and developing privacy laws and regulations that directly address AI issues. Expect more regional, industry-specific, and company-specific regulations to come into play in the coming months and years, many of them using the EU AI Act as a blueprint for protecting consumer privacy.

Public Perception and Awareness of AI Privacy Issues

Since ChatGPT was released, the general public has developed a basic knowledge of and interest in AI technologies. Despite the excitement, public perception of AI technology is fearful, especially where AI privacy is concerned.

Many consumers don’t trust the motivations of big AI and tech companies and worry that their personal data and privacy will be compromised by the technology. Frequent mergers, acquisitions, and partnerships in this space can lead to emerging monopolies and fuel fear of the power these organizations hold.

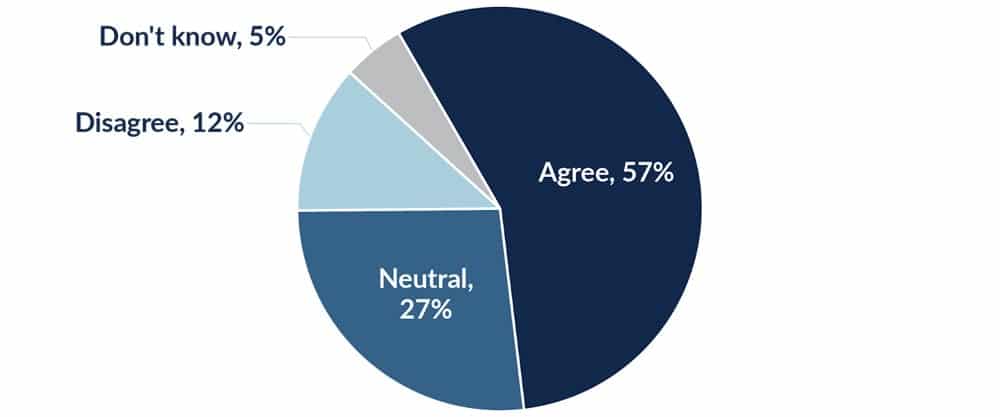

According to a 2023 survey by the International Association of Privacy Professionals, 57 percent of consumers fear that AI is a significant threat to their privacy, while 27 percent feel neutral about AI and privacy issues. Only 12 percent disagreed that AI will significantly harm their personal privacy.

Source: IAPP Privacy and Consumer Trust Report 2023


Real-World Examples of AI and Privacy Issues

While there have been several significant and highly publicized security breaches involving AI technology and its data, many vendors and industries are taking important strides toward better data protection. We cover both failures and successes in the following examples.

High-Profile Privacy Issues Involving AI

Here are some of the biggest breaches and privacy violations that directly involved AI technology over the past several years:

- Microsoft: Its recent announcement of the Recall feature, which allows business leaders to collect, save, and review user-activity screenshots from their devices, received significant pushback for its lack of privacy-by-design elements, as well as for the company’s recent problems with security breaches. Microsoft will now let users more easily opt in or out of the process, and it plans to improve data protection with just-in-time decryption and encrypted search index databases.

- OpenAI: OpenAI experienced its first major outage in March 2023 because of a bug that exposed certain users’ chat history data to other users and, for a period of time, even exposed payment and other personal information to unauthorized users.

- Google: A former Google employee stole AI trade secrets and data to share with the People’s Republic of China. While this doesn’t necessarily affect personal data privacy, the fact that employees of AI and tech companies can gain this kind of access is concerning.

Successful Implementations of AI with Strong Privacy Protections

Many AI companies are innovating to create privacy-by-design AI technologies that benefit both businesses and consumers, including the following:

- Anthropic: Especially with its latest Claude 3 models, Anthropic has continued to develop its constitutional AI approach, which reinforces model safety and transparency. The company also follows a responsible scaling policy to regularly test, and share with the public, how its models perform against biological, cyber, and other critical ethics metrics.

- MOSTLY AI: This is one of several AI vendors that have developed comprehensive technology for synthetic data generation, which protects original data from unnecessary use and exposure. The technology works especially well for responsible AI and ML development, data sharing, and testing and quality assurance.

- Glean: One of the most popular AI enterprise search solutions on the market today, Glean was designed with security and privacy at its core. Its features include zero trust security and a trust layer, user authentication, the principle of least privilege, GDPR compliance, and data encryption at rest and in transit.

- Hippocratic AI: This generative AI product, designed specifically for healthcare services, complies with HIPAA and has been evaluated extensively by nurses, physicians, health systems, and payor partners to ensure that data privacy is protected and patient data is used ethically to deliver better care.

- Simplifai: A solution for AI-supported insurance claims and document processing, Simplifai explicitly follows a privacy-by-design approach to protect its customers’ sensitive financial data. Its privacy practices include data masking; limited storage times and regular data deletion; built-in platform, network, and data security components and technology; customer-driven data deletion; data encryption; and the use of regional data centers that meet regional requirements.

Best Practices for Managing AI and Privacy Issues

While AI presents an array of challenging privacy issues, companies can overcome these concerns by following best practices like focusing on data governance, establishing appropriate use policies, and educating all stakeholders.

Invest in Data Governance and Security Tools

Some of the best solutions for protecting AI tools and the rest of your attack surface include extended detection and response (XDR), data loss prevention, and threat intelligence and monitoring software. A number of data-governance-specific tools also exist to help you protect data and keep all data use in compliance with relevant regulations.

Establish an Appropriate Use Policy for AI

Internal business users should know what data they can use and how they should use it when engaging with AI tools. This is particularly important for organizations that work with sensitive customer data, like protected health information (PHI) and payment information.
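A use policy is easier to follow when part of it is enforced automatically. As a minimal sketch, assuming your organization wants to stop payment card numbers from reaching external AI tools, the hypothetical `check_prompt` helper below flags runs of 13 to 19 digits that pass the Luhn checksum used by card numbers. It is illustrative only. It can miss cards written in unusual formats, can flag other Luhn-valid numbers, and does nothing for PHI, which dedicated data loss prevention tools handle far more thoroughly.

```python
import re

# Candidate card number: 13 to 19 digits, optionally separated by single
# spaces or hyphens (e.g., "4111 1111 1111 1111").
CARD_CANDIDATE = re.compile(r"\b(?:\d[ -]?){12,18}\d\b")


def luhn_valid(digits: str) -> bool:
    """Return True if the digit string passes the Luhn checksum."""
    total = 0
    # Walk from the rightmost digit and double every second digit.
    for i, ch in enumerate(reversed(digits)):
        n = int(ch)
        if i % 2 == 1:
            n *= 2
            if n > 9:
                n -= 9
        total += n
    return total % 10 == 0


def contains_card_number(text: str) -> bool:
    """Return True if any candidate digit run passes the Luhn check."""
    for match in CARD_CANDIDATE.finditer(text):
        digits = re.sub(r"[ -]", "", match.group())
        if 13 <= len(digits) <= 19 and luhn_valid(digits):
            return True
    return False


def check_prompt(text: str) -> str:
    """Hypothetical policy gate: block prompts that contain card data."""
    if contains_card_number(text):
        return "blocked: possible payment card number"
    return "allowed"


if __name__ == "__main__":
    print(check_prompt("Summarize this refund request for card 4111 1111 1111 1111."))
    print(check_prompt("Summarize our Q3 product roadmap."))
```

The first prompt is blocked because 4111 1111 1111 1111 (a well-known test card number) passes the Luhn check. The second prompt is allowed.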

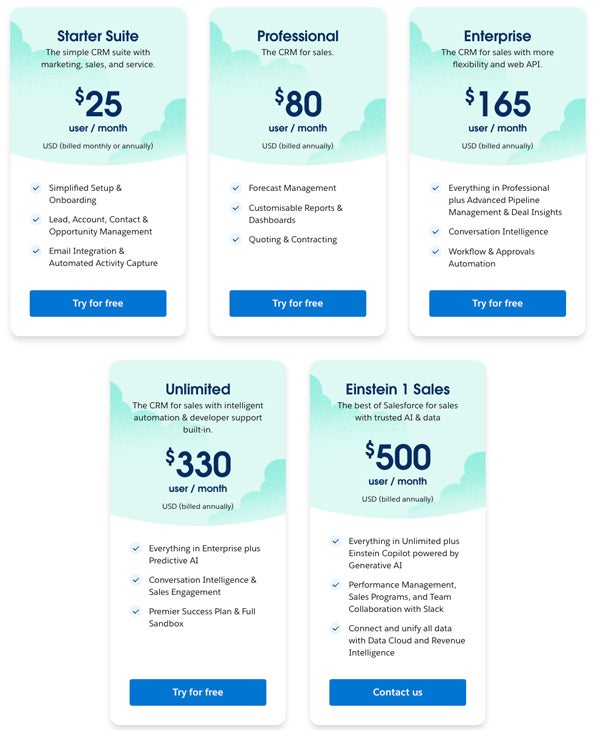

Read the Fine Print

AI vendors typically offer documentation or policies that explain how their products work and the basics of how they were trained. Read this documentation carefully to identify any red flags. If something is unclear in their policy documents, or you’re unsure about it, contact a representative for clarification.

Use Only Non-Sensitive Data

As a general rule, don’t enter your business’s or customers’ most sensitive data into any AI tool, even a custom-built or fine-tuned solution that feels private. If there’s a specific use case you want to pursue that involves sensitive data, research whether you can complete the operation safely with digital twins, data anonymization, or synthetic data.
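When a task genuinely needs records that contain personal details, one option is to replace identifiers before the text leaves your systems. The sketch below assumes the sensitive identifiers are email addresses. It swaps each one for a token derived from a keyed hash (HMAC-SHA256, from Python’s standard library), so the same person always maps to the same token and the vendor never sees the address. `PSEUDONYM_KEY` and the helper names are hypothetical. This is pseudonymization rather than full anonymization, because names, account numbers, or context elsewhere in the text can still identify someone. It is also a different technique from digital twins or synthetic data.

```python
import hashlib
import hmac
import re

# Hypothetical secret that stays inside your organization (e.g., in a vault).
# Never send it to the AI vendor, or the tokens can be linked back.
PSEUDONYM_KEY = b"replace-with-a-secret-key"

EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def pseudonym(value: str, key: bytes = PSEUDONYM_KEY) -> str:
    """Map a value to a stable token using a keyed hash (HMAC-SHA256)."""
    digest = hmac.new(key, value.lower().encode("utf-8"), hashlib.sha256).hexdigest()
    return f"<EMAIL_{digest[:10]}>"


def pseudonymize_emails(text: str) -> str:
    """Replace every email address in the text with its pseudonym token."""
    return EMAIL.sub(lambda m: pseudonym(m.group()), text)


if __name__ == "__main__":
    prompt = "Draft a reply to jane.doe@example.com and cc Jane.Doe@example.com."
    # Both spellings become the same token; the raw addresses are gone.
    print(pseudonymize_emails(prompt))
```

Using a keyed hash instead of a plain hash makes it harder for anyone without the key to recompute tokens from a list of known email addresses.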

Educate Stakeholders and Users on Privacy

Your organization’s stakeholders and employees should receive both general and role-specific training on how, when, and why they can use AI technologies in their daily work. Training should be an ongoing initiative that refreshes general knowledge and incorporates information about emerging technologies and best practices.

Enhance Built-In Security Features

When developing and releasing AI models for more general use, you must put in the effort to protect user data at every stage of the model lifecycle, from development through optimization. To improve your model’s security, focus heavily on data, adopting practices like data masking, data anonymization, and synthetic data. Also consider investing in more comprehensive, modern cybersecurity tool sets, such as extended detection and response (XDR) software platforms.
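Data masking can be as simple as hiding most of a value while keeping its format, so masked records still work for testing and quality assurance. The following sketch assumes a customer table with hypothetical `card_number`, `phone`, and `email` fields and applies an illustrative rule to each. The masking is one-way. Production systems usually apply it in the database or data pipeline rather than in ad hoc application code.

```python
from typing import Callable, Dict


def mask_tail(value: str, visible: int = 4, mask_char: str = "*") -> str:
    """Mask every digit except the last `visible` ones, keeping separators."""
    to_mask = max(sum(ch.isdigit() for ch in value) - visible, 0)
    out = []
    for ch in value:
        if ch.isdigit() and to_mask > 0:
            out.append(mask_char)
            to_mask -= 1
        else:
            out.append(ch)
    return "".join(out)


def mask_email(value: str) -> str:
    """Keep the first character of the local part and the full domain."""
    local, _, domain = value.partition("@")
    if not domain:
        return "*" * len(value)
    return local[:1] + "*" * max(len(local) - 1, 0) + "@" + domain


# Hypothetical mapping from field name to masking rule.
MASKING_RULES: Dict[str, Callable[[str], str]] = {
    "card_number": mask_tail,
    "phone": mask_tail,
    "email": mask_email,
}


def mask_record(record: Dict[str, str]) -> Dict[str, str]:
    """Return a copy of the record with sensitive fields masked."""
    return {
        field: MASKING_RULES[field](value) if field in MASKING_RULES else value
        for field, value in record.items()
    }


if __name__ == "__main__":
    row = {
        "customer_id": "cust-0042",
        "card_number": "4111 1111 1111 1111",
        "phone": "555-867-5309",
        "email": "jane.doe@example.com",
    }
    print(mask_record(row))
```

Fields without a rule, such as the internal `customer_id`, pass through unchanged. That is a deliberate choice in this sketch, so any new sensitive column needs its own rule.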

Proactively Implement Stricter Regulatory Measures

The EU AI Act and similar overarching regulations are on the horizon, but even before these laws take effect, AI developers and business leaders should regulate how AI models and data are used. Set and enforce clear data usage policies, give users ways to share feedback and concerns, and consider how AI and its training data can be used without violating industry-specific regulations or consumer expectations.

Improve Transparency in Data Usage

Increasing transparency about data usage, including data sources, collection methods, and storage methods, is good business practice all around. It gives customers greater confidence when using your tools. It provides the blueprints and documentation AI vendors need to pass a data or compliance audit. It also helps AI developers and vendors form a clearer picture of what they’re doing with AI and the roadmap they plan to follow.
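Transparency starts with knowing, for every dataset, where it came from, how it was collected, where it is stored, and how long it is kept. As a hypothetical sketch, assuming your team keeps that information as structured records, a short script can flag any dataset whose disclosure is incomplete before an auditor does. The `DatasetDisclosure` fields are illustrative, not a standard schema.

```python
from dataclasses import dataclass, fields
from typing import Dict, List


@dataclass
class DatasetDisclosure:
    """Hypothetical transparency record for one dataset an AI system uses."""
    name: str
    source: str             # where the data came from
    collection_method: str  # how it was gathered (e.g., opt-in form, licensed feed)
    storage_location: str   # where it is kept (e.g., cloud region, on-premises)
    retention_days: int     # how long records are kept before deletion


def disclosure_gaps(record: DatasetDisclosure) -> List[str]:
    """Return names of fields that are blank (text) or non-positive (numbers)."""
    gaps = []
    for f in fields(record):
        value = getattr(record, f.name)
        if isinstance(value, str) and not value.strip():
            gaps.append(f.name)
        elif isinstance(value, int) and value <= 0:
            gaps.append(f.name)
    return gaps


def audit(inventory: List[DatasetDisclosure]) -> Dict[str, List[str]]:
    """Map each incomplete dataset's name to its missing fields."""
    report = {}
    for record in inventory:
        gaps = disclosure_gaps(record)
        if gaps:
            report[record.name] = gaps
    return report


if __name__ == "__main__":
    inventory = [
        DatasetDisclosure("support-tickets", "internal helpdesk", "customer opt-in",
                          "EU cloud region", 90),
        DatasetDisclosure("web-corpus", "public websites", "", "US cloud region", 0),
    ]
    print(audit(inventory))
```

Here the report lists only `web-corpus`, whose collection method is blank and whose retention period is missing.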

Reduce Data Storage Periods

The longer data is stored in a third-party repository or AI model (especially one with limited security protections), the more likely it is to fall victim to a breach or bad actor. Simply limiting storage periods to the time strictly necessary for training and quality assurance helps protect data against unauthorized access. It also gives users greater peace of mind when they learn that this reduced data storage policy is in place.
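A retention policy only protects data if something actually deletes the expired records. This minimal sketch assumes stored prompts carry a timezone-aware UTC creation timestamp and uses a hypothetical 30-day window. An in-memory list stands in for the real datastore. In practice, a scheduled job would run the purge and would also need to cover backups and logs.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import List

# Hypothetical retention window: set it to the shortest period that
# training and quality assurance actually require.
RETENTION = timedelta(days=30)


@dataclass
class StoredPrompt:
    user_id: str
    text: str
    created_at: datetime  # timezone-aware UTC timestamp


def purge_expired(records: List[StoredPrompt], now: datetime,
                  retention: timedelta = RETENTION) -> List[StoredPrompt]:
    """Return only the records created within the retention window."""
    cutoff = now - retention
    return [r for r in records if r.created_at >= cutoff]


if __name__ == "__main__":
    now = datetime(2024, 6, 1, tzinfo=timezone.utc)
    records = [
        StoredPrompt("u1", "old query", now - timedelta(days=45)),
        StoredPrompt("u2", "recent query", now - timedelta(days=3)),
    ]
    kept = purge_expired(records, now)
    print([r.text for r in kept])  # ['recent query']
```

Passing `now` explicitly, instead of calling `datetime.now()` inside the function, keeps the purge logic easy to test.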

Ensure Compliance with Copyright and IP Laws

While current regulations on how AI can incorporate IP and copyrighted assets are murky at best, AI vendors will improve their reputation (and be better prepared for upcoming regulations) if they vet their sources from the outset. Likewise, business users of AI tools should carefully review the documentation AI vendors provide about how their data is sourced. If you have questions or concerns about IP usage, contact that vendor directly, or even stop using the tool.
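Vetting sources from the outset can include recording a license for every candidate training source and refusing to use anything without an approved one. The sketch below assumes each source is described by a dict with a `license` field and uses a hypothetical allowlist of SPDX license identifiers. Deciding which licenses are acceptable for AI training is a legal question that this code does not answer. Sources with unknown or missing licenses are routed to human review rather than used by default.

```python
from typing import Dict, List, Tuple

Source = Dict[str, str]

# Hypothetical allowlist; your legal team decides what actually belongs here.
ALLOWED_LICENSES = {"CC0-1.0", "CC-BY-4.0"}


def vet_sources(sources: List[Source]) -> Tuple[List[Source], List[Source]]:
    """Split candidate training sources into approved and needs-review lists.

    A source is approved only if it records a license on the allowlist;
    anything else, including a missing license, goes to review.
    """
    approved: List[Source] = []
    review: List[Source] = []
    for src in sources:
        if src.get("license") in ALLOWED_LICENSES:
            approved.append(src)
        else:
            review.append(src)
    return approved, review


if __name__ == "__main__":
    candidates = [
        {"name": "public-domain-images", "license": "CC0-1.0"},
        {"name": "artist-portfolio-site", "license": "All rights reserved"},
        {"name": "forum-dump"},  # no license recorded
    ]
    ok, needs_review = vet_sources(candidates)
    print([s["name"] for s in ok])
    print([s["name"] for s in needs_review])
```

Defaulting to review, rather than to approval, means a missing license can never silently place content in a training set.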

Bottom Line: Addressing AI and Privacy Issues Is Essential

AI tools offer businesses and everyday consumers all kinds of new conveniences, ranging from task automation and guided Q&A to product design and programming. While these tools can simplify our lives, they also risk violating individual privacy in ways that can damage vendor reputation, consumer trust, cybersecurity, and regulatory compliance.

It takes extra effort to use AI responsibly and protect user privacy, but that effort is essential given how privacy violations can affect a company’s public image. As this technology matures and becomes more pervasive in daily life, it’s especially important to follow AI laws as they’re passed and to develop more specific AI best practices that align with your organization’s culture and your customers’ privacy expectations.

For more tips on cybersecurity, risk management, and ethical use of generative AI, check out these best practice guides:

- Generative AI Ethics: Concerns and Possible Solutions

- Generative AI and Cybersecurity: Ultimate Guide

- Risks of Generative AI: 6 Risk Management Tips