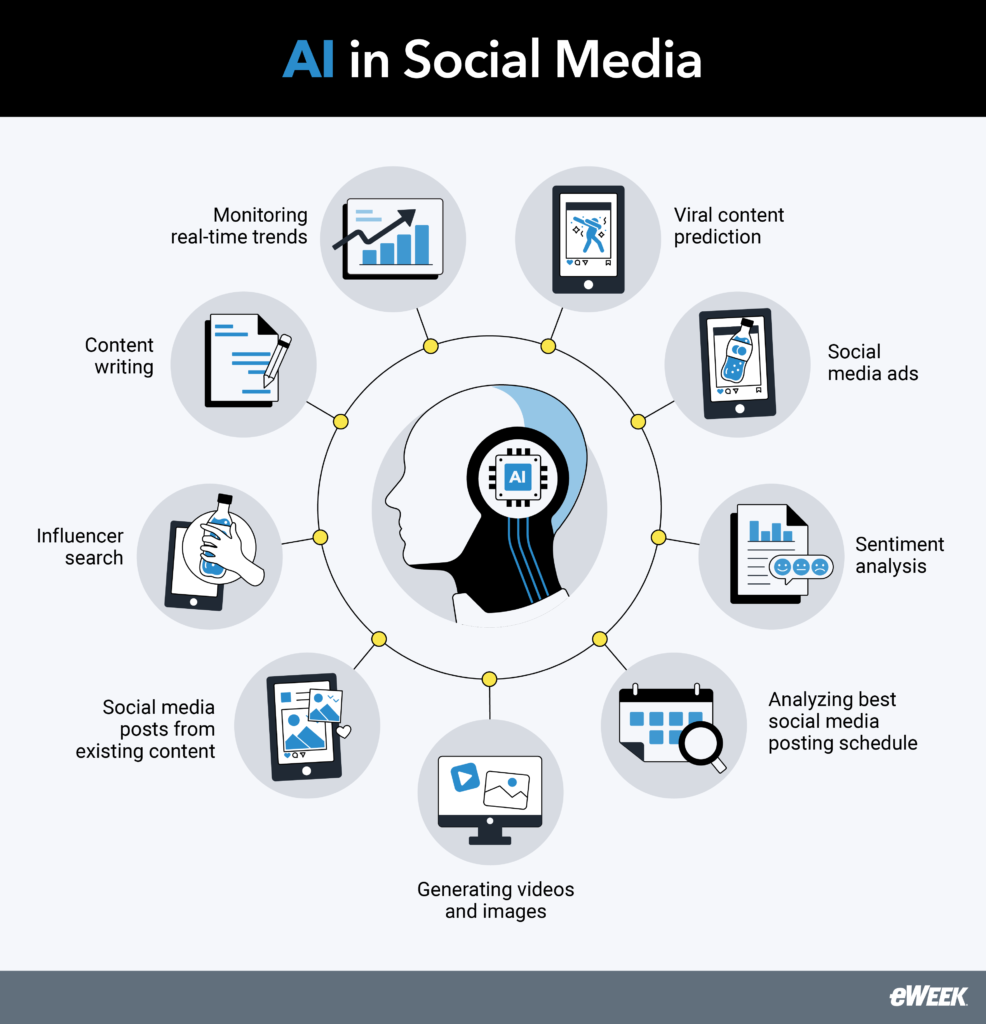

Artificial intelligence (AI) has advanced to the point that machines can now perform tasks once limited to humans. AI can produce art, engage in intelligent conversations, recognize objects, learn from experience, and make autonomous decisions, making it useful for personalized recommendations, social media content creation, healthcare decisions, screening job candidates, self-driving cars, and facial recognition. The relationship between AI and ethics is of growing importance: while the technology is new and exciting, with the potential to benefit businesses and humanity as a whole, it also creates many unique ethical challenges that must be understood, addressed, and regulated.

KEY TAKEAWAYS

- Incorporating ethics into the use of artificial intelligence and related technologies protects organizations, their employees, and their customers.

- Organizations that implement AI must prioritize transparency, fairness, data security, and safety.

- Ethical AI guidelines help organizations create frameworks that align with local regulations and standards for AI.

TABLE OF CONTENTS

- The Importance of Ethics in AI

- 4 Key Ethical Principles in AI

- Ethical Challenges in AI

- Case Study Examples of Ethics in Artificial Intelligence

- Government Regulations and Policies for AI Ethics

- Other Frameworks and Guidelines for Ethical AI

- 7 Ways To Implement Ethical AI

- Bottom Line: Understanding AI and Ethics

The Importance of Ethics in AI

The role of ethics in AI is to create frameworks and guardrails that ensure organizations develop and use AI in ways that put privacy and safety first. This includes making sure AI treats all groups fairly, without bias; protecting people’s privacy through responsible data usage; and holding companies accountable for the behavior of the artificial intelligence they’ve developed or deployed.

For AI to be considered trustworthy, companies must be transparent about what training data is used, how it is used, and what processes AI systems use to make decisions. In addition, AI must be built to be secure, particularly in sectors like healthcare, where patient privacy is paramount. Ultimately, humans establish moral and ethical criteria for AI to ensure that it acts in accordance with our values and beliefs.

Ethical AI helps organizations build trust and loyalty with their users. It also helps companies comply with regulations, reduce their legal risk, and improve their reputations. Ethical practices promote innovation and growth, resulting in new opportunities, and establishing the safety and reliability of AI can reduce harm and increase confidence in its applications.

Overall, ethical AI fosters an equitable society by preserving human rights and contributing to broader societal benefits, indirectly supporting economic success and providing users with fair, trustworthy, and respectful AI systems.

4 Key Ethical Principles in AI

Following ethical principles can help ensure that AI systems are trustworthy, safe, fair, and respectful of user rights, letting AI developers create a technology that helps as many people as possible while minimizing potential risks.

Transparency and Accountability in AI

Transparency in AI refers to being clear and open about how AI systems work, including how decisions are made and what data is used. Transparency fosters trust by making it easier for users to understand AI behavior, and accountability ensures that there are defined responsibilities for AI system outputs, allowing faults to be identified and corrected. This principle means that AI creators and users are held responsible for any undesirable consequences.
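In practice, accountability starts with being able to reconstruct what a system decided and on what basis. A minimal sketch of a decision audit trail, using only the Python standard library, might look like the following. The record fields (`model_version`, `inputs`, `output`, `timestamp`) and the JSON Lines format are illustrative assumptions, not a prescribed standard.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from io import StringIO
from typing import Any, TextIO


@dataclass
class DecisionRecord:
    """One auditable AI decision (field names are illustrative assumptions)."""
    model_version: str
    inputs: dict[str, Any]
    output: Any
    # UTC timestamp so records from different systems can be ordered.
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


def log_decision(record: DecisionRecord, sink: TextIO) -> None:
    """Append the record as one JSON line so it can be reviewed later."""
    sink.write(json.dumps(asdict(record), sort_keys=True) + "\n")


def load_decisions(source: TextIO) -> list[dict[str, Any]]:
    """Read back every logged decision for an audit, skipping blank lines."""
    return [json.loads(line) for line in source if line.strip()]


if __name__ == "__main__":
    buffer = StringIO()  # stands in for an append-only log file
    log_decision(DecisionRecord("credit-model-1.2", {"income": 52000}, "approve"), buffer)
    buffer.seek(0)
    for entry in load_decisions(buffer):
        print(entry["model_version"], entry["output"])
```

Writing one self-describing record per decision means an auditor can later determine which model version produced a contested outcome, which is the kind of traceability the accountability principle calls for.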

Fairness and Non-Discrimination in AI

Fairness in AI means treating everyone equally and avoiding favoritism toward any group. AI algorithms must be free of biases that might lead to unjust treatment. Non-discrimination means that AI should not base its decisions on biased or unfair factors. Together, these principles ensure that AI systems are reasonable and equitable, treating all individuals equally regardless of background.
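Fairness is easier to uphold when it is measured. One simple, widely used metric is demographic parity: compare the rate of favorable outcomes (for example, approvals) across groups. The sketch below assumes decisions are booleans and that group labels are available for auditing purposes. Demographic parity is only one of several competing fairness definitions, and which one is appropriate depends on the application.

```python
from collections import defaultdict
from collections.abc import Hashable, Sequence


def selection_rates(
    groups: Sequence[Hashable], decisions: Sequence[bool]
) -> dict[Hashable, float]:
    """Share of favorable decisions within each group."""
    if len(groups) != len(decisions):
        raise ValueError("groups and decisions must have the same length")
    totals: dict[Hashable, int] = defaultdict(int)
    positives: dict[Hashable, int] = defaultdict(int)
    for group, decision in zip(groups, decisions):
        totals[group] += 1
        positives[group] += int(decision)
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(
    groups: Sequence[Hashable], decisions: Sequence[bool]
) -> float:
    """Largest gap in selection rate between any two groups (0.0 means parity)."""
    rates = selection_rates(groups, decisions)
    if not rates:
        raise ValueError("need at least one decision")
    return max(rates.values()) - min(rates.values())


if __name__ == "__main__":
    groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
    approved = [True, True, True, False, True, False, False, False]
    print(selection_rates(groups, approved))                # {'A': 0.75, 'B': 0.25}
    print(demographic_parity_difference(groups, approved))  # 0.5
```

A gap near zero means groups receive favorable outcomes at similar rates. A large gap does not prove discrimination on its own, but it is a signal to investigate the data and the model.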

Privacy and Data Protection in AI

In AI, privacy refers to the need to keep personal information confidential and secure and to verify that data is used in ways that respect users’ privacy. Data protection involves safeguarding data from misuse or theft, installing robust security measures to prevent cyberattacks, and ensuring that data is only used for its intended purposes. These principles promote trust by assuring users that their data is handled ethically and securely.
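One concrete data-protection technique is pseudonymization: replacing direct identifiers such as email addresses with tokens before data reaches an AI pipeline. The sketch below uses a keyed hash (HMAC-SHA256) from Python’s standard library. The field names and the choice of which fields count as identifiers are hypothetical. Pseudonymization reduces exposure but is not full anonymization, since the remaining fields may still identify someone.

```python
import hashlib
import hmac
import secrets


def pseudonymize(identifier: str, key: bytes) -> str:
    """Replace a direct identifier with a keyed hash (HMAC-SHA256, hex-encoded).

    The same identifier and key always yield the same token, so records can
    still be joined, but someone without the key cannot recompute the token
    from a guessed identifier.
    """
    return hmac.new(key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


def strip_identifiers(record: dict, id_fields: set[str], key: bytes) -> dict:
    """Return a copy of the record with the (hypothetical) identifying fields pseudonymized."""
    return {
        name: pseudonymize(str(value), key) if name in id_fields else value
        for name, value in record.items()
    }


if __name__ == "__main__":
    key = secrets.token_bytes(32)  # in practice, load this from a secrets manager
    patient = {"email": "jane@example.com", "age": 41}
    print(strip_identifiers(patient, {"email"}, key))
```

Keeping the key separate from the pseudonymized data is the design choice that matters: whoever holds only the data sees stable tokens, not the original identifiers.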

Safety and Reliability in AI

Safety in AI means ensuring that systems do not harm people, property, or the environment, which requires extensive testing to avoid harmful malfunctions or unexpected incidents. Reliability means that AI systems consistently perform their intended functions successfully in a variety of contexts, including handling unforeseen events. These principles ensure that AI systems are dependable and pose no threat to users or society.

Moral Challenges in AI

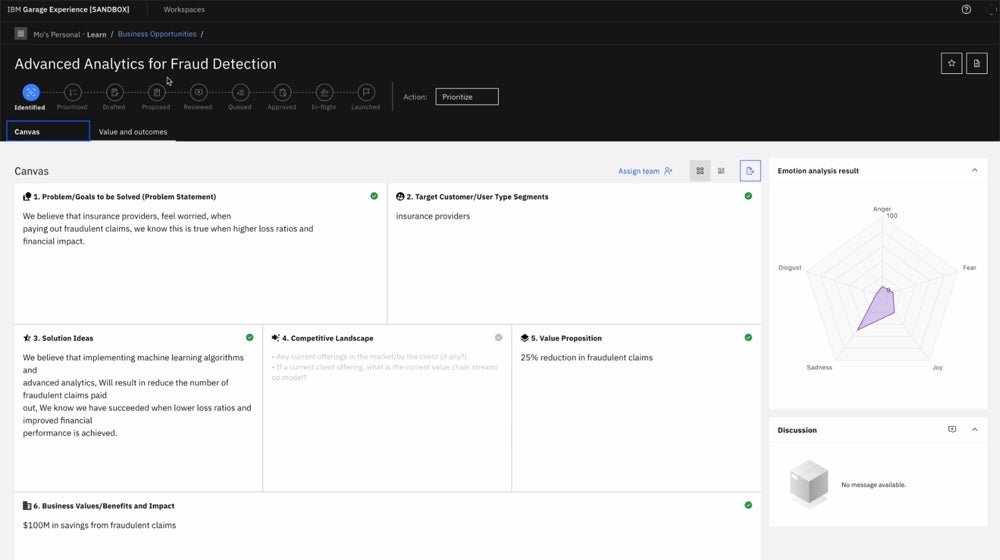

Regardless of widespread public concern about AI ethics, many companies have but to totally tackle these points. A survey performed by Conversica reported that 86 p.c of organizations which have adopted AI agree on the necessity to have clearly acknowledged tips for its applicable use. Nevertheless, simply 6 p.c of corporations have tips in place to make sure the accountable use of AI. Firms utilizing AI reported that the main points have been lack of transparency, the danger of false data, and the accuracy of knowledge fashions.

Bias in AI Data

Because humans created AI, and AI relies on data provided by humans, some human bias will make its way into AI systems. In a very public example, the AI criminal justice tool COMPAS (Correctional Offender Management Profiling for Alternative Sanctions) tended to discriminate against certain groups.

COMPAS was designed to predict a defendant’s risk of committing another crime, and courts and probation and parole officers used it to determine appropriate criminal sentences and which offenders were eligible for probation or parole. But ProPublica reported that COMPAS was 45 percent more likely to assign higher risk scores to Black defendants than to white defendants. In reality, Black and white defendants reoffend at about the same rate (59 percent), but Black defendants were receiving longer sentences and were less likely to receive probation or parole because of AI bias.
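Audits like ProPublica’s look not just at average scores but at error rates per group, for example how often people who did not reoffend were nonetheless labeled high risk (the false positive rate). The sketch below computes that rate per group from labeled outcomes. The records are made-up toy data, not COMPAS data, and the `Case` layout is an assumption for illustration.

```python
from collections import defaultdict
from typing import NamedTuple


class Case(NamedTuple):
    group: str
    predicted_high_risk: bool
    reoffended: bool


def false_positive_rates(cases: list[Case]) -> dict[str, float]:
    """Per group: share of people who did NOT reoffend but were flagged high risk.

    Groups with no non-reoffending cases are omitted, since the rate is undefined.
    """
    negatives: dict[str, int] = defaultdict(int)
    false_positives: dict[str, int] = defaultdict(int)
    for case in cases:
        if not case.reoffended:
            negatives[case.group] += 1
            if case.predicted_high_risk:
                false_positives[case.group] += 1
    return {g: false_positives[g] / negatives[g] for g in negatives}


if __name__ == "__main__":
    # Toy data only: illustrates the calculation, not real outcomes.
    toy = [
        Case("group_a", True, False), Case("group_a", True, False),
        Case("group_a", False, False), Case("group_a", False, False),
        Case("group_b", True, False), Case("group_b", False, False),
        Case("group_b", False, False), Case("group_b", False, False),
    ]
    print(false_positive_rates(toy))  # {'group_a': 0.5, 'group_b': 0.25}
```

In this toy data, a member of `group_a` who does not reoffend is twice as likely to be wrongly flagged, a disparity that comparing average scores alone could hide.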

While some bias may be inevitable, steps should be taken to mitigate it. Difficult questions remain about the degree to which bias must be eliminated before an AI can be used to make decisions. Is it sufficient to create an AI system that is less biased than humans, or should we require that the system come closer to having no bias at all?

Data Privacy Issues

Our digital lives mean we leave a trail of data that can be exploited by businesses and governments. Even when done legally, this collection and use of personal data is ethically dubious, and people are often unaware of the extent to which it is happening and would likely be troubled by it if they were better informed.

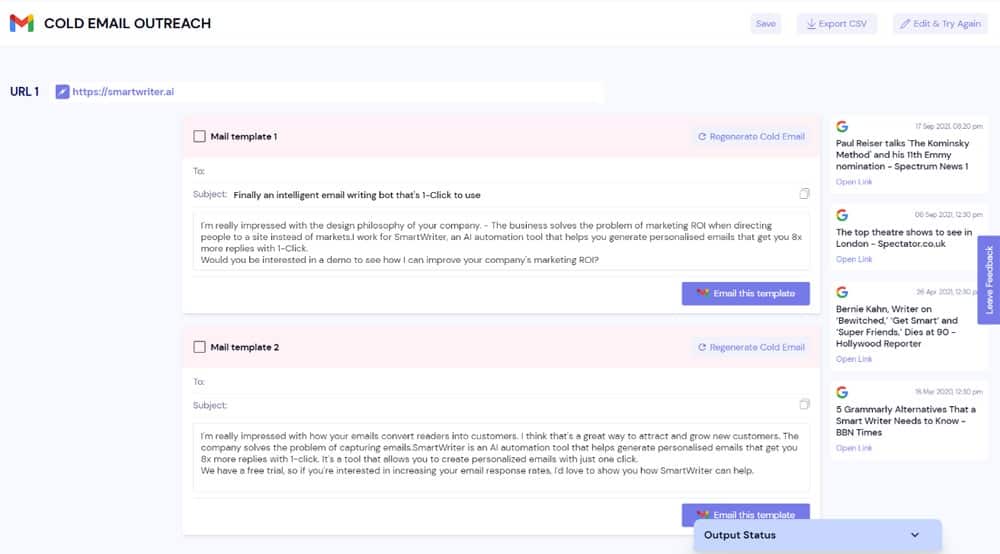

AI exacerbates these issues by making it easier to collect personal data and use it to manipulate people. In some instances, that manipulation is fairly benign, such as steering viewers to movies and TV programs they might like because they’ve watched something similar. But the lines get blurrier when AI uses personal data to manipulate customers into buying products. In other cases, algorithms might use personal data to sway people’s political opinions or even persuade them to believe things that aren’t true.

Additionally, AI facial recognition software makes it possible to gather extensive information about people by scanning photos of them. Governments are wrestling with the question of when people have the right to expect privacy in public. A few countries have decided that it’s acceptable to perform widespread facial recognition, while others outlaw it in all cases. Most draw the line somewhere in the middle.

Privacy and surveillance concerns present obvious ethical challenges for which there is no easy solution. At a minimum, organizations need to make sure they are complying with all relevant legislation and upholding industry standards. But leaders also need to do some introspection and consider whether they might be violating people’s privacy with their AI tools.

Transparency About Data Use and Algorithms

AI systems often help make important decisions that affect people’s lives, including hiring, medical, and criminal justice decisions. Because the stakes are so high, people should be able to understand why a particular AI system came to the conclusion it did. However, the rationale for determinations made by AI is often hidden from the people who are affected.

One reason is that vendors often keep the algorithms their AI systems use to make decisions secret to protect them from competitors. Also, AI algorithms can be too complicated for non-experts to easily understand. Sometimes AI decisions are not transparent to anyone, not even the people who designed the system. Deep learning, in particular, can produce models whose inner workings are effectively opaque to humans.

Organizational leaders need to ask themselves whether they’re comfortable with “black box” systems having such a large role in important decisions. Increasingly, the public is growing uncomfortable with opaque AI systems and demanding more transparency. As a result, many organizations are looking for ways to bring more traceability and governance to their artificial intelligence tools.
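Traceability is easiest when the model itself is interpretable. As a contrast to a black-box system, the sketch below shows a hypothetical linear scoring model whose every decision can be broken down into per-feature contributions. The feature names, weights, and threshold are invented for illustration. Complex models such as deep neural networks generally need separate post-hoc explanation techniques, which this example does not show.

```python
from dataclasses import dataclass


@dataclass
class LinearScorer:
    """A deliberately simple, fully inspectable scoring model.

    Weights and feature names are hypothetical; the point is that every
    decision can be broken down into per-feature contributions.
    """
    weights: dict[str, float]
    bias: float
    threshold: float

    def contributions(self, features: dict[str, float]) -> dict[str, float]:
        """How much each feature pushed the score up or down (missing features count as 0)."""
        return {name: w * features.get(name, 0.0) for name, w in self.weights.items()}

    def explain(self, features: dict[str, float]) -> str:
        """Return the decision together with a human-readable breakdown."""
        parts = self.contributions(features)
        score = self.bias + sum(parts.values())
        decision = "approve" if score >= self.threshold else "decline"
        # List the largest influences first so the main reason is easy to spot.
        ranked = sorted(parts.items(), key=lambda kv: abs(kv[1]), reverse=True)
        reasons = ", ".join(f"{name} {value:+.2f}" for name, value in ranked)
        return f"{decision} (score {score:.2f}; {reasons})"


if __name__ == "__main__":
    scorer = LinearScorer(
        weights={"on_time_payment_rate": 2.0, "debt_to_income": -3.0},
        bias=0.0,
        threshold=0.5,
    )
    print(scorer.explain({"on_time_payment_rate": 0.9, "debt_to_income": 0.4}))
    # approve (score 0.60; on_time_payment_rate +1.80, debt_to_income -1.20)
```

The trade-off is that simple models may be less accurate than opaque ones, which is part of the judgment leaders face when deciding how much “black box” behavior is acceptable.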

Human Liability and Accountability for AI Transgressions

The fact that AI systems can act autonomously raises important liability and accountability questions about who should be held responsible when something goes wrong. For example, this issue arises when autonomous vehicles cause accidents or even deaths.

Usually, when a defect causes an accident, the manufacturer is held liable and required to pay the appropriate legal penalty. However, in the case of autonomous systems like self-driving cars, legal systems have significant gaps. It’s unclear when the manufacturer should be held responsible in such cases.

Similar difficulties arise when AI is used to make healthcare recommendations. If the AI makes the wrong recommendation, should its manufacturer be held responsible? Or does the practitioner bear some responsibility for double-checking that the AI is correct? Legislatures and courts are still working out the answers to many questions like these.

Self-Awareness and Ethical Obligations

Some experts say that AI could someday achieve self-awareness. This could imply that an AI system would have rights and moral standing similar to those of humans.

This may seem far-fetched, but it’s a goal for some AI scientists, and at the pace AI technology is progressing, it’s a real possibility. AI has already become able to do things that were once thought impossible.

If this were to happen, humans could have significant ethical obligations regarding the way they treat AI. Would it be wrong to force an AI to accomplish the tasks it was designed to do? Would we be obligated to give an AI a choice about whether or how it was going to execute a command? And could we ever be in danger from an AI?

Case Study Examples of Ethics in Artificial Intelligence

AI is transforming numerous industries, providing significant benefits while posing ethical challenges in fields such as healthcare, criminal justice, finance, and autonomous vehicles.

Ethics of AI in Healthcare

In diagnostic imaging, AI algorithms are used to evaluate medical images for early detection of diseases like cancer. These systems can sometimes outperform human radiologists, but their use can raise ethical concerns about patient privacy, data security, and potentially biased outcomes if the training data is not representative. The ethical considerations include obtaining informed consent for data use, maintaining transparency in AI decision-making, correcting biases in training data, and protecting patient privacy.

Ethics of AI in Criminal Justice

Criminal law addresses the most harmful actions in society, minimizing crime and apprehending and punishing perpetrators. AI technologies provide new opportunities to dramatically reduce crime and deal with offenders more fairly and efficiently by forecasting where crimes will occur and recording them as they happen. AI can also assess the likelihood of reoffending, assisting judges in imposing suitable sentences to keep communities safe.

However, the application of AI in criminal justice raises ethical considerations, including bias, privacy infringements, and the need for transparency and accountability in AI-driven decisions. The ethical use of AI requires careful consideration of these concerns as well as strong legal and regulatory structures to prevent misuse.

Ethics of AI in Finance

One interesting use of ethical AI in finance is loan application evaluation. Financial organizations can use AI to analyze loan applications more comprehensively and fairly. Ethical AI models can assess an applicant’s financial health by analyzing a broader set of data points over time, such as payment history, income stability, and spending habits. This technique helps avoid biases that can arise from standard credit scoring systems, which often rely on limited data points such as credit scores. By ensuring openness and fairness in decision-making, ethical AI can promote financial inclusion and enable fairer access to finance for underrepresented communities.
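One way a lender might check whether a loan model treats groups even-handedly is to compare approval rates. The sketch below computes a disparate impact ratio and flags it against the “four-fifths” rule of thumb, which comes from U.S. employment-selection guidance. Applying that 0.8 threshold to lending here is an assumption for illustration, not a legal standard for credit decisions.

```python
def approval_rate(decisions: list[bool]) -> float:
    """Fraction of applications approved."""
    if not decisions:
        raise ValueError("need at least one decision")
    return sum(decisions) / len(decisions)


def disparate_impact_ratio(protected: list[bool], reference: list[bool]) -> float:
    """Approval rate of the protected group divided by that of the reference group."""
    ref_rate = approval_rate(reference)
    if ref_rate == 0:
        raise ValueError("reference group has no approvals; ratio is undefined")
    return approval_rate(protected) / ref_rate


def flags_adverse_impact(
    protected: list[bool], reference: list[bool], threshold: float = 0.8
) -> bool:
    """True when the ratio falls below the assumed four-fifths threshold."""
    return disparate_impact_ratio(protected, reference) < threshold


if __name__ == "__main__":
    protected = [True] * 6 + [False] * 4   # 60% approved (hypothetical)
    reference = [True] * 8 + [False] * 2   # 80% approved (hypothetical)
    ratio = disparate_impact_ratio(protected, reference)
    print(f"ratio={ratio:.2f}, flagged={flags_adverse_impact(protected, reference)}")
    # ratio=0.75, flagged=True
```

A flag like this is a prompt for human review of the features and training data, not an automatic verdict that the model is unfair.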

Ethics of AI in Autonomous Vehicles

The implementation of emergency decision-making algorithms in autonomous vehicles is a compelling use case for ethical AI. These algorithms are meant to handle situations in which a collision is unavoidable and the AI must determine how to minimize harm. For example, if an autonomous vehicle faces the choice of hitting a pedestrian or swerving and possibly endangering its passengers, the AI relies on ethical frameworks to make the decision.

In such cases, the AI weighs a variety of parameters, including the number of lives at stake, the severity of potential injury, and the probability of different outcomes. The ethical considerations underlying this decision-making process include reducing harm and ensuring fairness.
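To make the idea of “weighing” parameters concrete, the sketch below scores each possible maneuver by expected harm: the sum, over its possible outcomes, of probability × severity × number of people affected. This is a deliberately simplified, hypothetical illustration. The severity scale and all numbers are invented, it is not how any particular vehicle is known to work, and whether harms should be traded off this way at all is exactly the ethical question this section raises.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Outcome:
    probability: float    # chance this outcome occurs if the maneuver is chosen
    severity: float       # hypothetical injury-severity score, 0 (none) to 1 (fatal)
    people_affected: int


def expected_harm(outcomes: list[Outcome]) -> float:
    """Probability-weighted harm summed over the possible outcomes of one maneuver."""
    total_p = sum(o.probability for o in outcomes)
    if abs(total_p - 1.0) > 1e-9:
        raise ValueError(f"outcome probabilities must sum to 1, got {total_p}")
    return sum(o.probability * o.severity * o.people_affected for o in outcomes)


def least_harmful(maneuvers: dict[str, list[Outcome]]) -> str:
    """Name of the maneuver with the lowest expected harm."""
    return min(maneuvers, key=lambda name: expected_harm(maneuvers[name]))


if __name__ == "__main__":
    # Invented numbers for illustration only.
    options = {
        "brake_straight": [Outcome(0.7, 0.6, 1), Outcome(0.3, 0.0, 1)],  # harm 0.42
        "swerve": [Outcome(0.2, 0.9, 2), Outcome(0.8, 0.1, 2)],          # harm 0.52
    }
    print(least_harmful(options))  # brake_straight
```

Even this toy version shows where the fairness concerns enter: someone has to choose the severity scale and decide whose harm counts, and those choices are value judgments rather than engineering facts.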

Government Regulations and Policies for AI Ethics

Government regulations and policies on AI ethics vary widely, with each region developing distinct strategies based on its political, economic, and social context. These approaches influence how AI is developed, deployed, and governed, with varying priorities such as innovation, ethics, privacy, and security.

China

China leads in AI regulation, having implemented rules for recommendation algorithms in 2021, deep synthesis content such as deepfakes in 2022, and generative AI including chatbots in 2023. These rules mandate transparency, prohibit price discrimination, safeguard worker rights, and require algorithm registration and security assessments. National guidelines such as the “Governance Principles for the New Generation Artificial Intelligence” (2019) and “Ethical Norms for the New Generation Artificial Intelligence” (2021) emphasize ethical AI development. China’s strategy emphasizes information management, transparency, and ethical considerations, influencing AI deployment both domestically and globally.

United States of America

The U.S. lacks comprehensive AI legislation and instead relies on sector-specific regulations governing privacy, discrimination, and safety. Several agencies, notably the Federal Trade Commission (FTC) and the National Institute of Standards and Technology (NIST), have issued guidelines for AI governance. However, there is an ongoing debate over the need for more centralized AI governance to keep pace with the ethical concerns raised by rapidly developing AI technologies.

European Union

The European Union (EU) prioritizes ethical AI development and human rights protection. The General Data Protection Regulation (GDPR) significantly affects how AI systems handle data, requiring transparency and accountability. In addition, the proposed AI Act seeks to further regulate AI systems by ensuring transparency, accountability, and safety, reaffirming the EU’s commitment to ethical AI practices.

Canada

Canada’s rules and legislation aim to promote AI ethics and transparency. The Canadian AI strategy promotes responsible AI research and development while highlighting ethical considerations. The Personal Information Protection and Electronic Documents Act (PIPEDA) governs data privacy in AI applications, requiring that personal information be handled responsibly and transparently.

Asia (Excluding China)

In Asia, countries like Japan, South Korea, and Singapore prioritize innovation, research, and collaboration in their AI strategies. While regulations differ among these countries, ethical concerns are important to their AI policies. These nations strive to strike a balance between innovation and ethical standards to support responsible AI development and deployment.

Other Frameworks and Guidelines for Ethical AI

Frameworks and guidelines for ethical AI provide safety and security for organizations and their users. Many governments have begun to impose ethical standards that must be followed to avoid potential issues.

Global Initiatives and Standards in AI Ethics

The Institute of Electrical and Electronics Engineers’ (IEEE) Global Initiative on Ethics of Autonomous and Intelligent Systems seeks to ensure that all parties involved in the design and development of autonomous and intelligent systems address ethical considerations. Its purpose is to advance these technologies for the good of humanity using the following approaches:

- Ethically Aligned Design (EAD): This document outlines ideas for addressing ethical issues in AI and autonomous system design.

- IEEE P7000 Series Standards: These standards cover the intersection of technology and ethics and promote innovation while taking societal impact into account. Working groups focus on specific issues such as transparency, accountability, and bias reduction.

- Ethics Certification Program for Autonomous and Intelligent Systems (ECPAIS): ECPAIS seeks to develop certification criteria that encourage transparency, accountability, and fairness in autonomous and intelligent systems.

Corporate Responsibility in AI and Ethics

The integration of responsible AI practices and corporate responsibility demonstrates how important ethical considerations are for effective AI implementation in business. Adopting a sustainable and ethical strategy is important not only for moral reasons but also as a strategic imperative in today’s technology-driven society. As organizations deploy AI, upholding ethical norms will shape the future of the technology and solidify their standing as responsible, forward-thinking companies. This commitment helps them capitalize on AI’s promise while benefiting society, ensuring technology’s role as a force for good.

7 Ways To Implement Ethical AI

The ethical challenges surrounding AI are tremendously difficult and complex, and they will not be solved overnight. However, organizations can take several practical steps toward implementing and improving their AI ethics:

- Developing Ethical AI Policies: Creating ethical AI policies involves developing guidelines so that AI systems are transparent, fair, accountable, and respectful of user privacy. Governments, industry leaders, and ethicists often work together to design these principles to address AI’s complex ethical challenges.

- Putting Ethical AI into Practice: Implementing ethical AI policies requires converting ethical ideas into actions within an organization, such as educating stakeholders, establishing standards, creating incentives, assembling ethics task forces, and conducting regular monitoring. These practices help ensure that AI systems are effective and adhere to ethical standards while building trust and protecting users’ rights.

- Raising Ethical AI Awareness: Most people have either no familiarity with these issues or only a passing familiarity with them. A good first step is to start talking about ethical challenges and sharing articles that raise important considerations.

- Setting Goals and KPIs for Improving AI Ethics: Many of these problems will never completely go away, but it is useful to have a standard that AI systems must meet. For example, organizations must decide to what degree AI systems must eliminate bias compared to humans before they are used to make important decisions, and they need clear policies and procedures to ensure AI tools meet these standards before entering production.

- Creating Incentives for Implementing Ethical AI: Employees should be recognized for raising ethical considerations rather than rushing AI into production without checking for bias, privacy, or transparency concerns. Similarly, they need to know that they will be held accountable for any unethical use of AI.

- Establishing an AI Ethics Task Force: The field of AI is progressing at a rapid pace, so an organization needs a dedicated team that keeps up with the changing landscape. This team should be cross-functional, with representatives from data science, legal, management, and the functional areas where AI is in use. The team can help evaluate the use of AI and make recommendations on policies and procedures.

- Monitoring and Evaluating AI Systems: Continuous monitoring and evaluation of AI systems are essential to ensure that they meet ethical standards and perform as expected. This involves evaluating AI systems for bias, transparency, and compliance with established ethical AI guidelines, and making any necessary changes based on these evaluations.
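The goal-setting and monitoring steps can work together: a team agrees on a bias KPI, then checks each batch of production decisions against it. The sketch below is one hypothetical way to encode the “compared to humans” standard mentioned in the KPI step, where the model’s gap in favorable-decision rates between groups must stay within a chosen fraction of a measured human baseline. The `BiasKPI` class, the 0.5 default ratio, and the batch format are all assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BiasKPI:
    """Hypothetical policy: model gap must be at most max_ratio x the human baseline gap."""
    human_baseline_gap: float
    max_ratio: float = 0.5

    @property
    def limit(self) -> float:
        return self.human_baseline_gap * self.max_ratio


def selection_rate_gap(batch: list[tuple[str, bool]]) -> float:
    """Largest difference in favorable-decision rate between groups in one batch."""
    counts: dict[str, list[int]] = {}  # group -> [favorable, total]
    for group, decision in batch:
        tally = counts.setdefault(group, [0, 0])
        tally[0] += int(decision)
        tally[1] += 1
    rates = [favorable / total for favorable, total in counts.values()]
    return max(rates) - min(rates) if rates else 0.0


def monitor(batches: list[list[tuple[str, bool]]], kpi: BiasKPI) -> list[int]:
    """Return indices of batches whose gap breaches the KPI, for human review."""
    return [i for i, batch in enumerate(batches) if selection_rate_gap(batch) > kpi.limit]


if __name__ == "__main__":
    kpi = BiasKPI(human_baseline_gap=0.20)  # limit = 0.10
    batches = [
        [("a", True), ("a", False), ("b", True), ("b", False)],  # gap 0.0
        [("a", True), ("a", True), ("b", True), ("b", False)],   # gap 0.5
    ]
    print(monitor(batches, kpi))  # [1]
```

Returning batch indices rather than halting the system keeps a human in the loop: the task force reviews the flagged batches and decides whether the model needs changes.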

Bottom Line: Understanding AI and Ethics

AI promises significant improvements in productivity and efficiency, but its full potential can only be realized if organizations are committed to ethical principles. Without rigorous monitoring, AI can undermine trust and accountability. Companies need to develop and adhere to ethical frameworks and guidelines to ensure that best practices are followed to protect customer data, privacy, and company reputations.
